Bagging in Machine Learning Explained

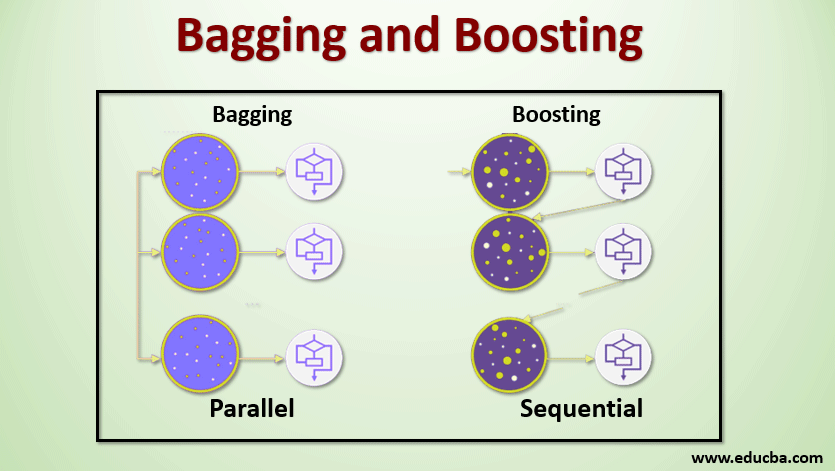

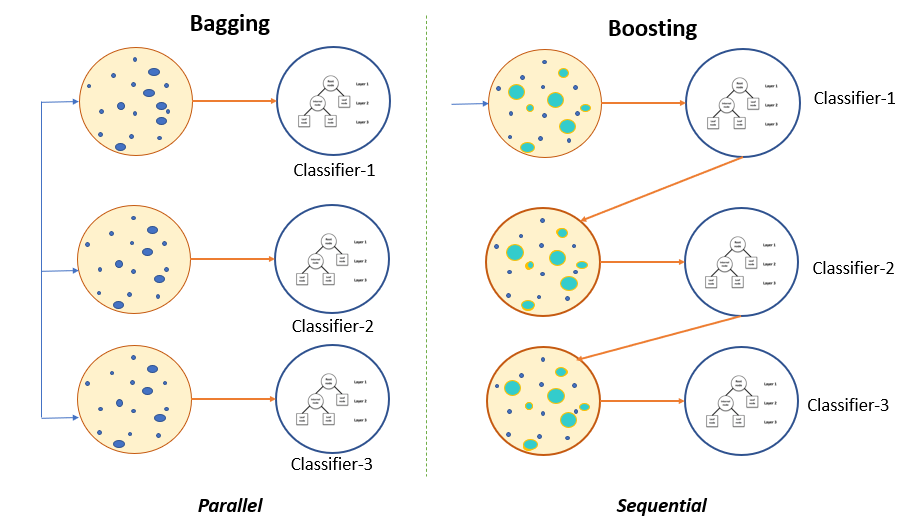

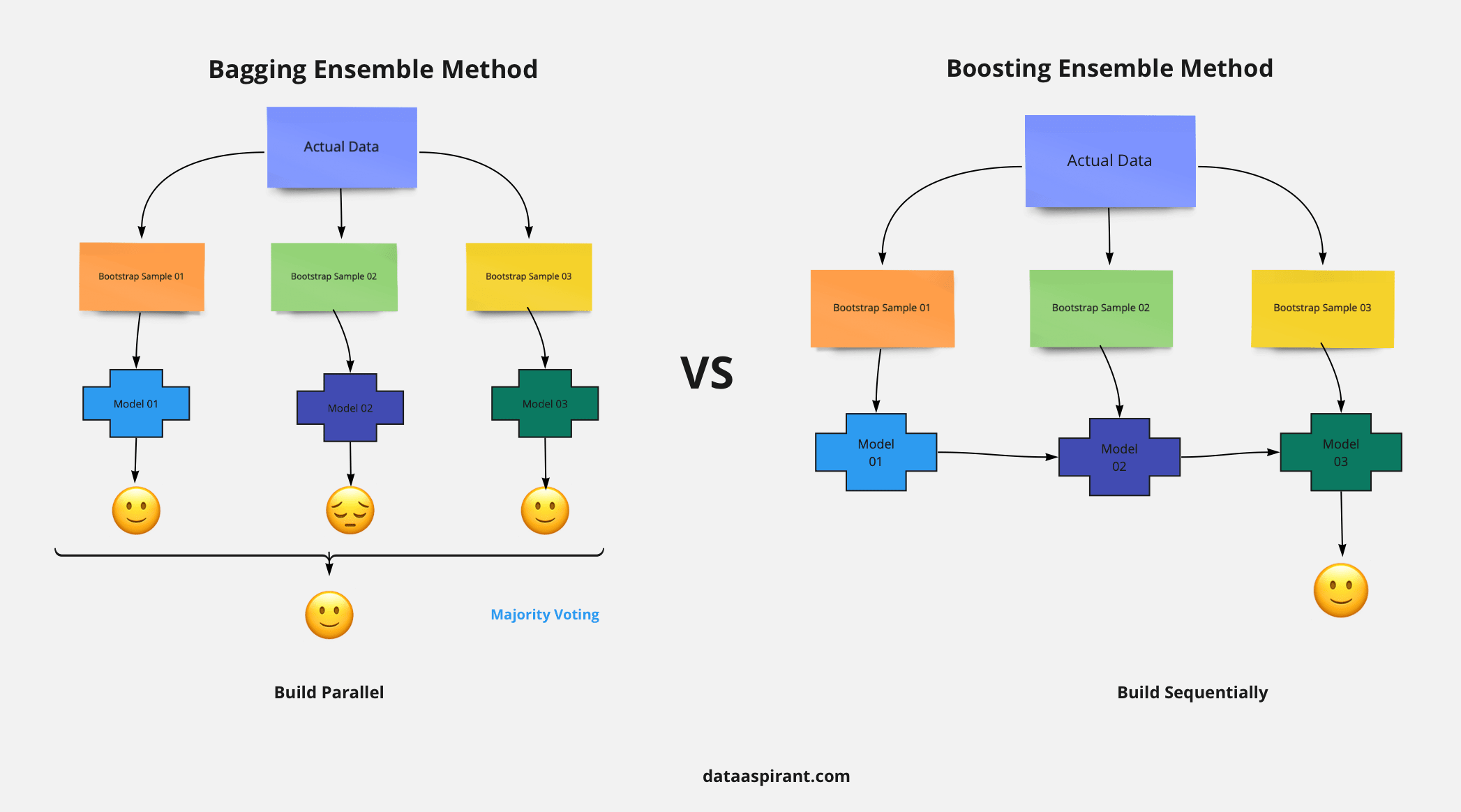

Bagging and boosting are similar in that both are ensemble techniques in which a set of weak learners is combined to create a strong learner that performs better than any single one.

Bagging In Financial Machine Learning Sequential Bootstrapping Python Example

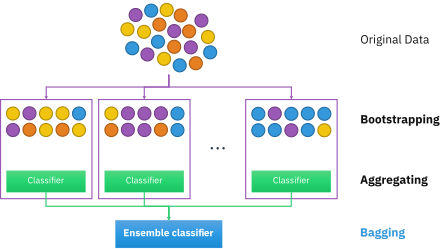

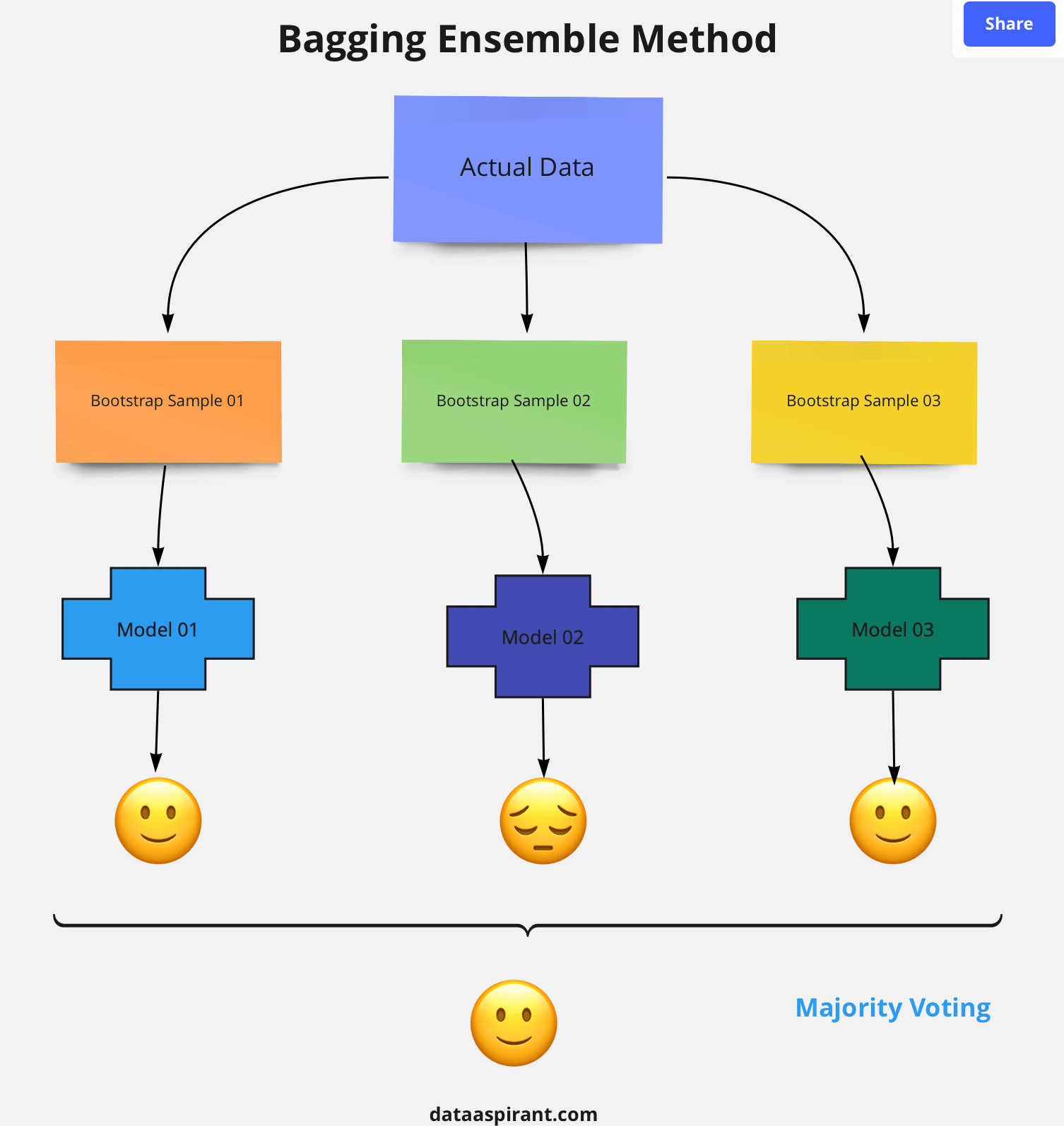

Aggregation in bagging refers to combining the predictions of all the individual models into a single outcome, typically by majority vote for classification or by averaging for regression.
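The aggregation step can be sketched in a few lines. This is a minimal illustration, not a library API: the function names `aggregate_votes` and `aggregate_average` and the sample predictions are made up for the example.

```python
from collections import Counter
from statistics import mean


def aggregate_votes(predictions):
    """Classification: return the most common label among the models' predictions."""
    return Counter(predictions).most_common(1)[0][0]


def aggregate_average(predictions):
    """Regression: return the arithmetic mean of the models' predictions."""
    return mean(predictions)


# Hypothetical outputs of five classifiers and three regressors for one input.
class_votes = ["spam", "ham", "spam", "spam", "ham"]
regression_outputs = [2.0, 3.0, 4.0]

majority = aggregate_votes(class_votes)
average = aggregate_average(regression_outputs)
```

With an odd number of binary classifiers a tie cannot occur; with an even number, some tie-breaking rule would have to be chosen.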

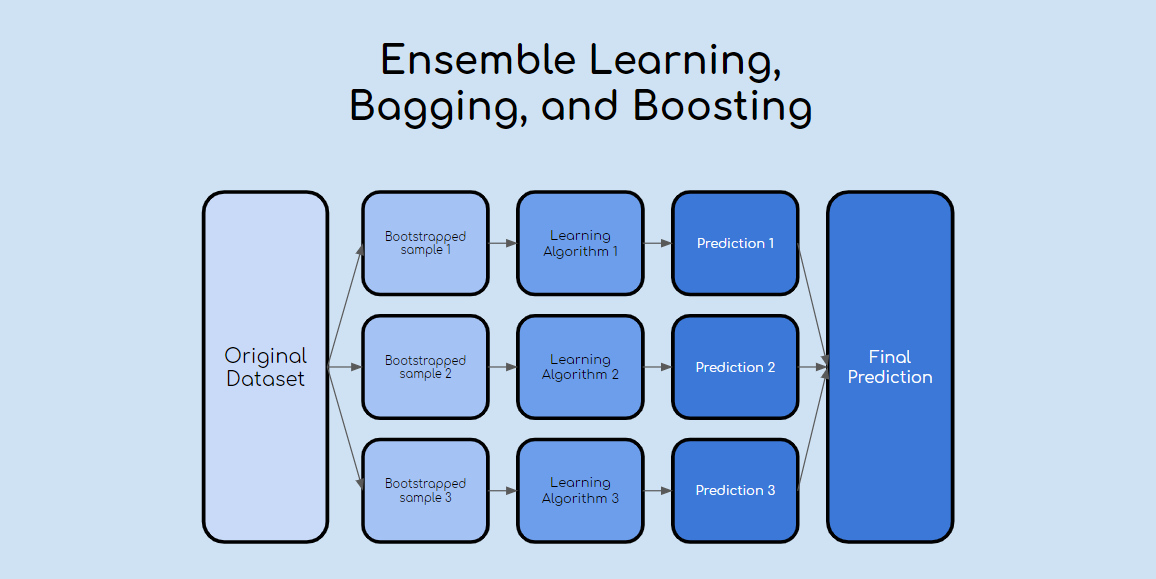

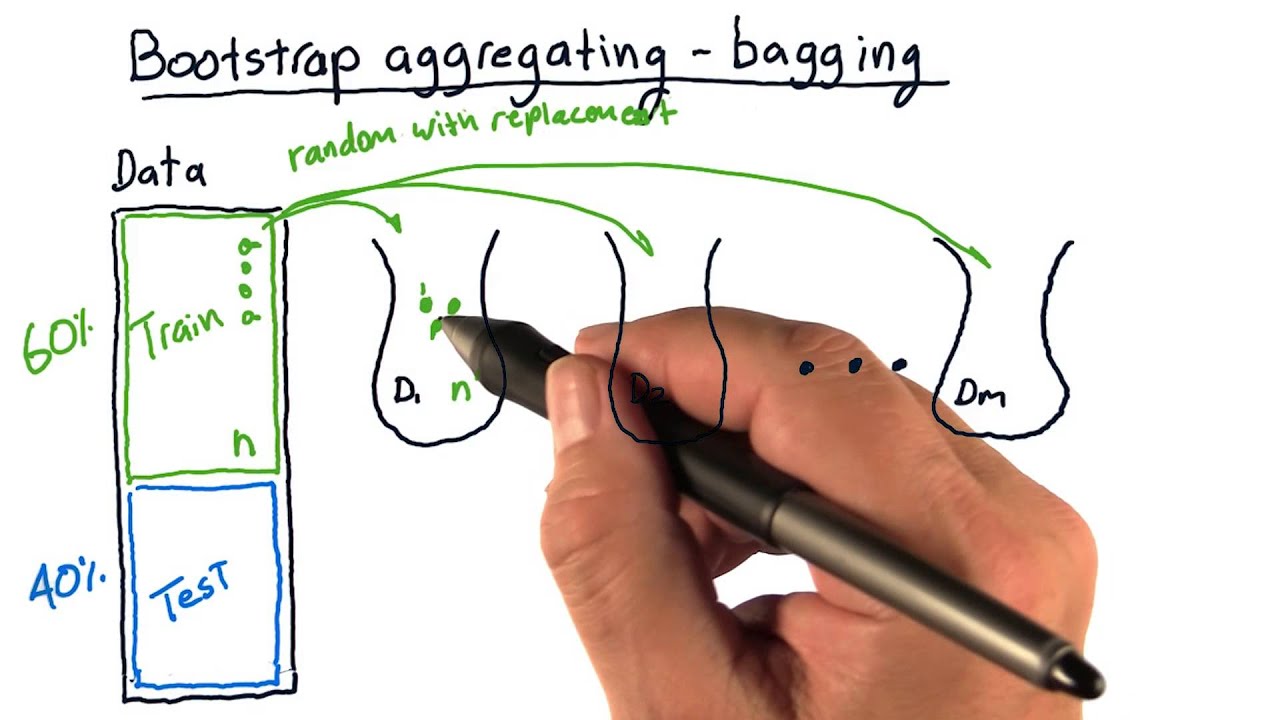

Bagging stands for Bootstrap Aggregating. As in a random forest, a vote is taken over the outputs of all the models. Bagging is a powerful method for improving the performance of simple models and reducing overfitting in more complex ones.

Bagging is one of the advanced ensemble methods; it improves overall performance by drawing random samples with replacement. It helps reduce variance. Bagging is also called bootstrap aggregation.

Bagging provides stability and increases the accuracy of machine learning algorithms used in statistical classification and regression.

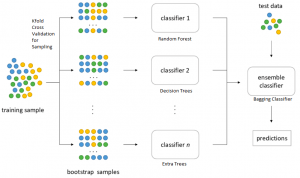

Bagging and pasting are techniques for creating varied subsets of the training data: bagging samples with replacement, pasting without. Strong learners composed of multiple trees can be called forests. Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees.
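The two sampling schemes differ only in whether an instance can be drawn more than once. A small sketch using the standard library, where the toy `training_set` and the subset size are arbitrary choices for illustration:

```python
import random

rng = random.Random(7)  # fixed seed so the sketch is repeatable

training_set = list(range(20))  # stand-in for 20 training instances
subset_size = 10

# Bagging: sample WITH replacement, so the same instance may appear several times.
bagging_subset = rng.choices(training_set, k=subset_size)

# Pasting: sample WITHOUT replacement, so every drawn instance is distinct.
pasting_subset = rng.sample(training_set, k=subset_size)
```

Either kind of subset would then be used to train one predictor of the ensemble.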

Bagging is similar to divide and conquer. Decision trees are very popular base models for ensemble methods, as in random forests. Bagging, short for bootstrap aggregating, creates a dataset by sampling the training set with replacement.

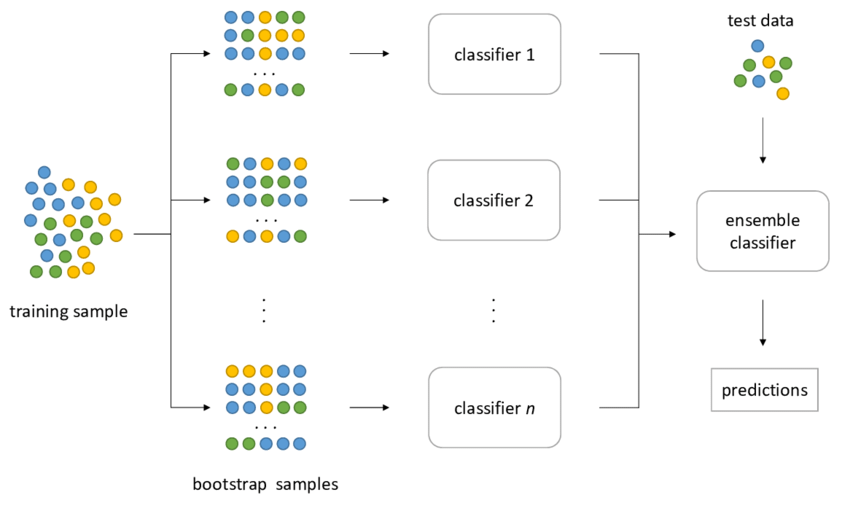

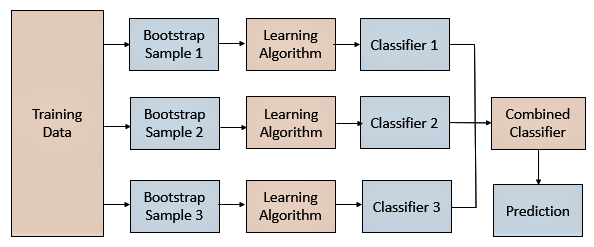

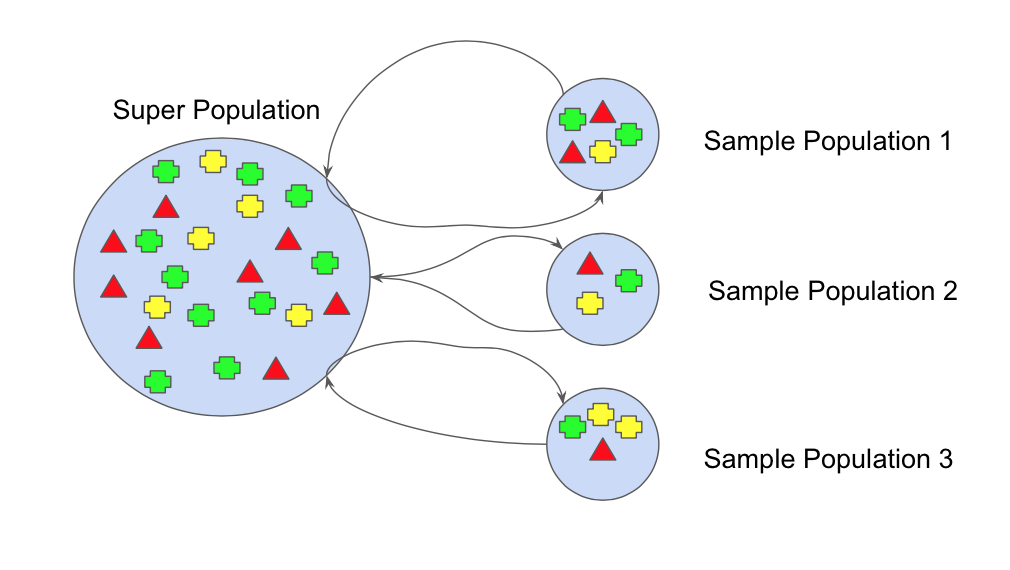

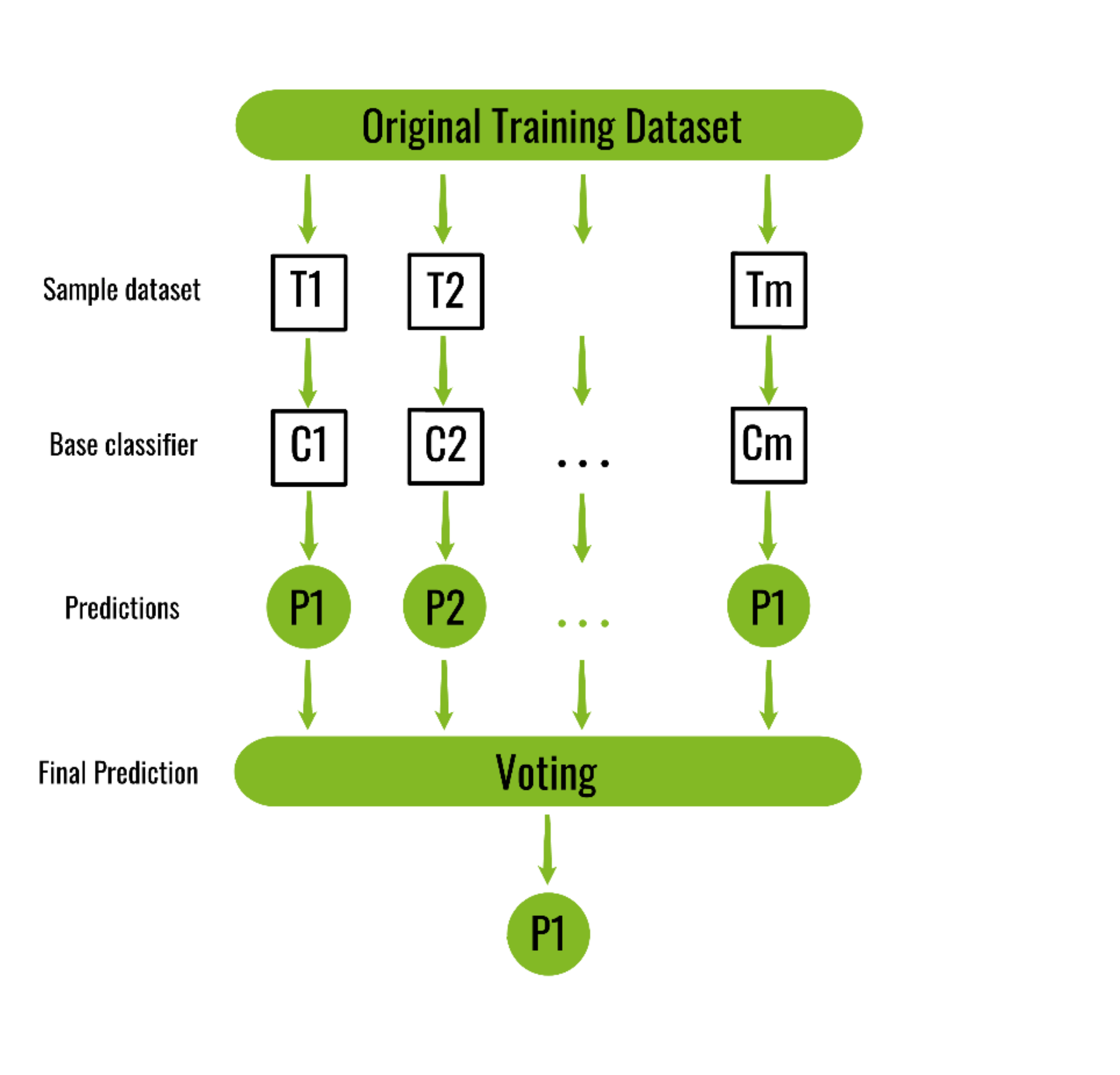

Bootstrap aggregating (bagging) is an ensemble generation method that trains base classifiers on varied samples of the training data. Sampling and training are repeated until the desired ensemble size is reached. A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions, either by voting or by averaging, to form a final prediction.
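One readily available implementation of such a meta-estimator is scikit-learn's `BaggingClassifier`. The sketch below assumes scikit-learn is installed; the synthetic dataset, the choice of 25 estimators, and the decision-tree base model are illustrative assumptions, not values from the text.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data (an assumption for the example).
X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# The base estimator is passed positionally because its keyword name
# differs between scikit-learn versions.
bagging = BaggingClassifier(
    DecisionTreeClassifier(),
    n_estimators=25,   # desired size of the ensemble
    max_samples=1.0,   # each bag is as large as the training set
    bootstrap=True,    # draw each bag with replacement
    random_state=0,
)
bagging.fit(X_train, y_train)
accuracy = bagging.score(X_test, y_test)
```

Setting `bootstrap=False` together with `max_samples` below 1.0 would switch the same estimator to sampling without replacement.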

Bagging decreases variance and helps avoid overfitting. Bootstrap aggregating, also known as bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. Bootstrapping in bagging refers to deriving multiple subsets from the whole set by sampling with replacement.

So let's start from the beginning. Bagging is a group of predictive models, each run on a subset of the original dataset, combined to achieve better accuracy and model stability.

The principle is easy to understand: instead of fitting one model on one sample of the population, several models are fitted on different samples of the population drawn with replacement. The biggest advantage of bagging is that multiple weak learners together can outperform a single strong learner. Bagging, also known as bootstrap aggregating, is the process in which multiple models of the same learning algorithm are trained on bootstrapped samples of the original dataset.

Bagging generates additional training sets from the original dataset. It uses subsets (bags) of the original dataset to get a fair idea of the overall distribution. For each classifier to be generated, bagging selects N samples with repetition from the training set of size N and trains a base classifier on them.
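The procedure just described (draw N samples with repetition, train a base classifier, repeat until the ensemble is full, then vote) can be sketched from scratch. Everything here is illustrative: the one-feature threshold "stump" stands in for a real base classifier, and the names `fit_stump`, `fit_bagging`, and `predict` are hypothetical.

```python
import random
from collections import Counter


def fit_stump(points):
    """Fit a one-feature threshold rule: predict 1 when x > threshold.

    Tries every x value in the bag as a threshold and keeps the first one
    with the fewest training errors.
    """
    best_threshold, best_errors = None, None
    for threshold, _ in points:
        errors = sum((x > threshold) != bool(y) for x, y in points)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def fit_bagging(points, n_models, rng):
    """Train n_models stumps, each on N points drawn with repetition."""
    n = len(points)
    stumps = []
    while len(stumps) < n_models:  # repeat until the ensemble is full
        bag = [points[rng.randrange(n)] for _ in range(n)]
        stumps.append(fit_stump(bag))
    return stumps


def predict(stumps, x):
    """Majority vote over the stumps' individual predictions."""
    votes = Counter(int(x > threshold) for threshold in stumps)
    return votes.most_common(1)[0][0]


rng = random.Random(42)
# Toy data: x runs from 0.0 to 9.9, and the label is 1 when x >= 5.0.
train = [(i / 10, int(i >= 50)) for i in range(100)]
stumps = fit_bagging(train, n_models=11, rng=rng)
```

Calling `predict(stumps, 9.0)` and `predict(stumps, 1.0)` then classifies points far on either side of the boundary. An odd ensemble size is used so that a two-class vote cannot tie.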

It is usually applied to decision tree methods. Let's assume we have a sample dataset of 1000 instances (x) and we are using the CART algorithm. Bagging stands for bootstrap aggregation and is used to decrease the variance of the prediction model.
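The variance claim can be made concrete under a simplifying assumption that is not in the original text: suppose the predictions $\hat f_1(x), \dots, \hat f_B(x)$ of the $B$ base models are identically distributed with variance $\sigma^2$ and pairwise correlation $\rho$. The variance of their average is then

$$
\operatorname{Var}\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
$$

The second term shrinks as $B$ grows, so adding models reduces variance, while the first term shows that the benefit is limited when the models are strongly correlated. This is one common way to see why bagging suits high-variance learners such as decision trees.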

The subsets produced by these techniques are then used to train the predictors of an ensemble. Bagging and boosting are both ensemble methods in machine learning, but what's the key idea behind them?

Bagging is a parallel method: it fits the learners independently of each other, making it possible to train them simultaneously.
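Because no learner depends on another, the fitting calls can be handed to a worker pool. The sketch below is only an illustration of that independence: the "model" is a deliberately trivial mean predictor, the name `fit_mean_model` is hypothetical, and a thread pool is used for simplicity (for CPU-bound training in CPython, a process pool would usually be the better fit).

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def fit_mean_model(job):
    """Draw one bootstrap sample and 'train' a model that predicts its mean."""
    data, seed = job
    rng = random.Random(seed)  # per-model seed keeps results repeatable
    bag = [data[rng.randrange(len(data))] for _ in data]
    return mean(bag)


data = [float(v) for v in range(1, 101)]    # toy regression targets
jobs = [(data, seed) for seed in range(8)]  # eight independent learners

# Each job is independent, so the pool may run them in any order.
with ThreadPoolExecutor(max_workers=4) as pool:
    models = list(pool.map(fit_mean_model, jobs))

# Aggregate by averaging the individual models' predictions.
ensemble_prediction = mean(models)
```

The final averaging step is the only point at which the learners' results meet.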

Bagging consists of fitting several base models on different bootstrap samples and building an ensemble model that averages the results of these weak learners.
